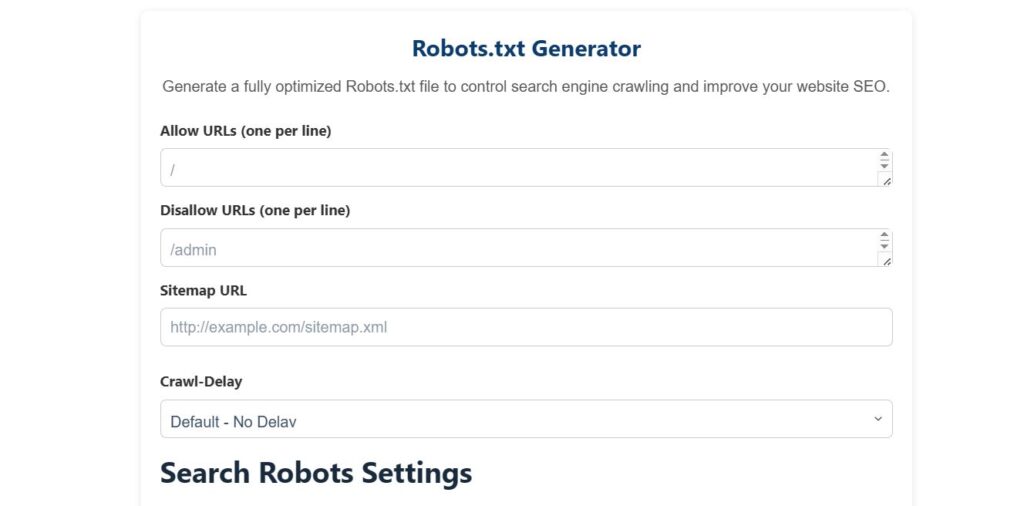

Robots.txt Generator

Generate a robots.txt file to control how search engines crawl your website and support your SEO.


Robots.txt Generator | Free Online Tool for SEO Optimization

If you run a website, you already know how important SEO is for attracting traffic and improving search engine rankings. One of the simplest yet most powerful tools for website optimization is a Robots.txt Generator. This tool helps you create a robots.txt file quickly, so search engine crawlers focus on the pages that matter and skip the ones you don’t want crawled.

What is a Robots.txt File?

A robots.txt file is a plain text file placed in the root directory of your website, so it is served at an address like https://example.com/robots.txt. Its primary function is to communicate with the crawlers that search engines such as Google, Bing, and Yahoo use. The file tells crawlers which pages or folders they may visit and which they should skip. Using a Robots.txt Generator makes writing the file simpler and less error-prone.

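Without a robots.txt file, crawlers are free to request any URL they discover, including admin panels, internal search results, or duplicate pages. This wastes crawl activity on pages that add nothing to search and can work against your SEO. A correctly generated robots.txt file helps you manage crawler activity. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. To keep a page out of search results, use a noindex meta tag on a page crawlers can access, or put the page behind a login.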
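To make the format concrete, here is a minimal robots.txt and a quick check of what it permits, using Python’s standard-library urllib.robotparser. The domain example.com and the paths /admin/ and /tmp/ are placeholders. This parser is only an approximation of how real search engines read the file; for example, it matches plain path prefixes and does not understand the * and $ wildcards that Google supports.

```python
from urllib.robotparser import RobotFileParser

# A minimal robots.txt as it might be served at https://example.com/robots.txt.
# /admin/ and /tmp/ are placeholder paths, not required names.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /tmp/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse() takes an iterable of lines

# can_fetch(user_agent, url) answers: may this crawler request this URL?
print(parser.can_fetch("Googlebot", "https://example.com/blog/post-1"))  # True
print(parser.can_fetch("Googlebot", "https://example.com/admin/login"))  # False
```

Because the file has a single User-agent: * group, every crawler, Googlebot included, falls back to those two Disallow rules.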

Why Do You Need a Robots.txt Generator?

Creating a robots.txt file manually can be tricky, especially for large websites with multiple sections. A single wrong rule can block important pages from being crawled, reducing your site’s visibility. A Robots.txt Generator automates this process: you select which paths to allow or block, and the tool writes correctly formatted rules for you.

Using this generator is especially helpful for:

New website owners who are unfamiliar with coding.

SEO professionals managing multiple websites.

Anyone who wants to save time and avoid errors in robots.txt.
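Under the hood, a generator mostly turns your allow and block choices into correctly formatted lines. The Python function below is a hypothetical sketch of that step, not this tool’s actual code. The name build_robots_txt and its parameters are assumptions made for illustration.

```python
from typing import Iterable, Optional


def build_robots_txt(
    disallow: Iterable[str],
    allow: Iterable[str] = (),
    user_agent: str = "*",
    sitemap: Optional[str] = None,
) -> str:
    """Build robots.txt text for a single user-agent group.

    Hypothetical helper for illustration; not the generator's actual code.
    """
    allow_paths = list(allow)
    disallow_paths = list(disallow)

    # Catch a common slip: every rule path should start with "/".
    for path in allow_paths + disallow_paths:
        if not path.startswith("/"):
            raise ValueError(f"rule path must start with '/': {path!r}")

    lines = [f"User-agent: {user_agent}"]
    # Allow lines come first because some parsers (e.g. Python's
    # urllib.robotparser) apply the first matching rule, while Google
    # applies the most specific (longest) matching rule regardless of order.
    lines += [f"Allow: {p}" for p in allow_paths]
    lines += [f"Disallow: {p}" for p in disallow_paths]
    if not allow_paths and not disallow_paths:
        lines.append("Disallow:")  # an empty Disallow value blocks nothing

    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


print(build_robots_txt(["/admin/", "/cart/"], sitemap="https://example.com/sitemap.xml"))
```

The path check catches only one kind of mistake. A real generator would likely need more validation, such as checking user-agent names and sitemap URLs.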

Benefits of a Robots.txt File for SEO

A robots.txt file can have a big impact on your SEO efforts. Here’s why:

Control Crawling: Decide which parts of your website search engines should crawl, so they don’t spend time on unimportant URLs.

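Keep Crawlers Out of Private Areas: Discourage crawling of pages such as login areas, admin dashboards, or staging versions of your site. Note that robots.txt is publicly readable and only advisory, so it is not a security measure; protect sensitive content with authentication.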

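Reduce Duplicate Content Crawling: Keep crawlers from spending time on multiple versions of the same page, such as sorted or filtered listings. To tell search engines which version should rank, canonical tags are generally the better tool.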

Reduce Crawler Load: Limiting crawler access to unnecessary pages cuts the number of bot requests your server has to handle. This matters most for large sites and is not a substitute for optimizing page speed for visitors.

Enhance SEO Strategy: Focus search engines on your most important content, improving chances of ranking higher.

A Robots.txt Generator makes all of this easier to set up, even for beginners.

How to Use a Free Robots.txt Generator

Using a free Robots.txt Generator is straightforward:

Open the tool in your browser.

Enter the paths (URLs or directories) you want to allow or block.

Select the search engines (user agents) the rules should apply to.

Click “Generate”, download your robots.txt file, and upload it to the root of your site so it is served at /robots.txt.

It’s that simple. No coding skills are required, and you can have a working file in minutes.
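Choosing search engines in the steps above corresponds to writing separate User-agent groups in the file. The sketch below, again checked with Python’s urllib.robotparser, shows that a crawler follows the group that names it and ignores the * group. The paths and the choice of Googlebot and Bingbot are illustrative only.

```python
from urllib.robotparser import RobotFileParser

# Two groups: one only for Googlebot, one for every other crawler.
# The paths are placeholders. A crawler obeys the one group that best
# matches its name, so Googlebot does not also apply the "*" rules.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /search/

User-agent: *
Disallow: /search/
Disallow: /beta/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for agent in ("Googlebot", "Bingbot"):
    print(agent, parser.can_fetch(agent, "https://example.com/beta/feature"))
# Googlebot True
# Bingbot False
```

Because groups do not combine, any rule you want a named crawler to follow has to be repeated inside its own group, which is why /search/ appears twice.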

Best Practices When Using Robots.txt

While a Robots.txt Generator makes creating a file easy, following best practices is crucial:

Double-check your file: Ensure no important pages are accidentally blocked.

Keep it updated: Whenever your site structure changes, for example when you add, move, or remove sections, review the robots.txt file.

Combine with other SEO tools: Tools like sitemap generators and meta tag creators work well alongside robots.txt. You can also point crawlers to your sitemap with a Sitemap: line in robots.txt.

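Test the file: Google Search Console’s robots.txt report shows which robots.txt files Google found for your site and flags parsing problems, and the URL Inspection tool shows whether a specific URL is blocked.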
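Before uploading a new file, you can also run a quick local check that your key pages stay crawlable and that the sitemap is declared. The helper check_robots below is a hypothetical sketch built on Python’s urllib.robotparser (site_maps() needs Python 3.8 or later). The robots.txt body and URLs are placeholders, and this parser is not identical to Google’s, so treat the result as a first pass before the Search Console checks.

```python
from typing import Iterable, List, Tuple
from urllib.robotparser import RobotFileParser

# Placeholder robots.txt and URLs; substitute your own before deploying.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://example.com/sitemap.xml
"""

MUST_BE_CRAWLABLE = [
    "https://example.com/",
    "https://example.com/products/blue-shirt",
    "https://example.com/blog/robots-txt-guide",
]


def check_robots(
    robots_txt: str, urls: Iterable[str], user_agent: str = "Googlebot"
) -> Tuple[List[str], List[str]]:
    """Return (URLs the file blocks, sitemap URLs it declares). Hypothetical helper."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    blocked = [u for u in urls if not parser.can_fetch(user_agent, u)]
    sitemaps = parser.site_maps() or []  # site_maps() returns None when none are listed
    return blocked, sitemaps


blocked, sitemaps = check_robots(ROBOTS_TXT, MUST_BE_CRAWLABLE)
print("blocked:", blocked)    # blocked: []
print("sitemaps:", sitemaps)  # sitemaps: ['https://example.com/sitemap.xml']
```

An empty blocked list is the result you want; any URL that shows up there is a page your new rules would hide from crawlers.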

Common Mistakes to Avoid

Many website owners make mistakes that hurt their SEO. Here are a few common pitfalls:

Blocking the entire website accidentally, for example by leaving a Disallow: / rule from a staging site in place.

Forgetting to allow CSS or JavaScript files needed for rendering pages.

Forgetting that robots.txt applies per host: each subdomain, such as blog.example.com, needs its own file.

Using a Robots.txt Generator reduces the risk of these errors, but it is still worth reviewing the generated file before you publish it.
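The three mistakes above are easy to reproduce. This sketch uses Python’s urllib.robotparser to show each one. The helper names allowed and robots_url_for, the /assets/ folder, and the example.com hosts are hypothetical and chosen only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """True if robots_txt lets user_agent crawl url (per urllib.robotparser)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def robots_url_for(page_url: str) -> str:
    """Crawlers look for robots.txt at the root of each scheme + host (+ port),
    so a subdomain like shop.example.com needs its own file."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


page = "https://example.com/products/blue-shirt"

# Mistake 1: "Disallow: /" blocks the whole site; an empty value blocks nothing.
print(allowed("User-agent: *\nDisallow: /\n", page))  # False
print(allowed("User-agent: *\nDisallow:\n", page))    # True

# Mistake 2: blocking an assets folder also hides the CSS pages need to render.
print(allowed("User-agent: *\nDisallow: /assets/\n",
              "https://example.com/assets/site.css"))  # False

# Mistake 3: the main site's robots.txt does not cover subdomains.
print(robots_url_for("https://shop.example.com/cart"))  # https://shop.example.com/robots.txt
```

The first pair differs by a single character, which is exactly why whole-site blocks slip through unnoticed.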

Real-Life Examples of Using Robots.txt

Imagine you run an e-commerce site. You may want to keep crawlers away from pages like checkout, cart, or account settings, while letting search engines crawl product pages, categories, and blog posts. By generating a robots.txt file, you can define these rules clearly. Remember that this keeps crawlers out rather than guaranteeing the pages stay out of search results, so account and checkout pages should also sit behind a login.

For blogs, you might block draft posts or author pages you don’t want crawled. For portfolio sites, you can block backend directories. A Robots.txt Generator helps handle all these scenarios easily.
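For the e-commerce scenario, the rules might look like the sketch below. The paths /checkout/, /cart/, and /account/ and the host shop.example.com are assumptions, since real stores use many different URL structures. The loop compares each URL against the outcome you intend, using Python’s urllib.robotparser, which is a cheap way to catch a rule that blocks too much or too little.

```python
from urllib.robotparser import RobotFileParser

# Placeholder paths and host; adjust to match your store's URL structure.
STORE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /cart/
Disallow: /account/

Sitemap: https://shop.example.com/sitemap.xml
"""

# URL -> whether you intend crawlers to be allowed to fetch it.
EXPECTED = {
    "https://shop.example.com/products/blue-shirt": True,
    "https://shop.example.com/category/shirts": True,
    "https://shop.example.com/blog/summer-lookbook": True,
    "https://shop.example.com/cart/": False,
    "https://shop.example.com/checkout/payment": False,
    "https://shop.example.com/account/settings": False,
}

parser = RobotFileParser()
parser.parse(STORE_ROBOTS_TXT.splitlines())

for url, expected in EXPECTED.items():
    actual = parser.can_fetch("Googlebot", url)
    status = "ok" if actual == expected else "MISMATCH"
    print(f"{status:8} crawlable={actual!s:5} {url}")
```

The same pattern works for the blog and portfolio cases: list the URLs you care about, state what you expect, and let the script flag any disagreement.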

Final Thought

A Robots.txt Generator is a must-have tool for anyone serious about SEO. It saves time, reduces mistakes, keeps crawlers away from pages that don’t belong in search, and helps search engines crawl your website efficiently. By using this tool, you can focus on creating high-quality content while your crawl rules stay in order behind the scenes.

Start using a free Robots.txt Generator today and give your website’s SEO a solid foundation. It’s simple, fast, and works for all types of websites.
